AV-Comparatives releases Malware Protection and Real-World tests

Here is an overview of the various tests that were released.

False Positive Test

https://www.av-comparatives.org/tests/false-alarm-test-march-2024/

The latest False Alarm Test from AV-Comparatives reveals that not all antivirus products are created equal when it comes to avoiding false positives. Some products generated very few false alarms, while others had a much higher rate. A high false alarm rate can lead to frustration, unnecessary file deletions, and even system problems.

Key Points:

- False positives (FPs) are a key quality metric: a lower FP rate indicates better overall antivirus performance.

- Products with higher false alarm rates are likely to be less reliable and more disruptive to users.

- Vendor differences exist: FP counts vary significantly across the tested products.

- Impact on user experience: FPs disrupt workflows and erode trust in the antivirus software.

- Not just about malware detection: While stopping malware is crucial, reliable FP avoidance is equally important for a positive user experience.

Test Results:

Compared with the previous test, some vendors lowered their false positive count while others saw it rise.

The results show that the top five products in this test are as follows:

- Kaspersky – with a total of three false positives.

- Trend Micro – with a total of three false positives.

- Bitdefender – with a total of eight false positives.

- Avast/AVG – with a total of ten false positives.

- ESET – with a total of ten false positives.

It is important to note that ESET has traditionally been one of the best performers in tests of this type. In the September 2023 test, ESET tied for second place with Avast/AVG, each with only one false positive. Both rose to ten false positives in the current test, a sizeable shift.

Meanwhile, Kaspersky has improved its ranking. In the September 2023 test, Kaspersky placed sixth with six false positives, compared with just three in the March 2024 test. Bitdefender’s ranking also improved, although its false positive count doubled from four (2023) to eight (2024). Trend Micro’s count increased from two to three, yet it placed second (2024) instead of third (2023).

Additionally, the test results include estimated prevalence information for the falsely flagged samples, in other words roughly how many users are likely to have each file. Prevalence is categorized into five levels:

- Level 1: Probably fewer than a hundred users

- Level 2: Probably several hundred users

- Level 3: Probably several thousand users

- Level 4: Probably several tens of thousands (or more) of users

- Level 5: Probably several hundred thousand or millions of users

| Vendor | Level 1 | Level 2 | Level 3 | Level 4 | Level 5 |

|---|---|---|---|---|---|

| Kaspersky | 1 | 1 | 1 | 0 | 0 |

| Trend Micro | 2 | 1 | 0 | 0 | 0 |

| Bitdefender | 3 | 4 | 0 | 0 | 1 |

| Avast/AVG | 6 | 4 | 0 | 0 | 0 |

| ESET | 6 | 3 | 1 | 0 | 0 |

| G DATA | 4 | 5 | 1 | 0 | 0 |

| Avira | 6 | 6 | 0 | 0 | 0 |

| Microsoft Defender | 13 | 3 | 2 | 0 | 0 |

| McAfee | 11 | 3 | 4 | 1 | 0 |

| Norton | 18 | 7 | 1 | 0 | 0 |

| F-Secure | 18 | 11 | 2 | 2 | 0 |

| Panda Watchguard | 26 | 12 | 1 | 0 | 0 |
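
The per-level counts in the table add up to each vendor's total false positive count. As a minimal sketch, the snippet below sums the rows for the top five vendors and also counts only the false positives on more widely used files. The function names and the `min_level=3` cutoff are assumptions made for illustration and are not part of the AV-Comparatives methodology.

```python
# False positive counts per prevalence level (Level 1..5), copied from the table above.
fp_by_level = {
    "Kaspersky": [1, 1, 1, 0, 0],
    "Trend Micro": [2, 1, 0, 0, 0],
    "Bitdefender": [3, 4, 0, 0, 1],
    "Avast/AVG": [6, 4, 0, 0, 0],
    "ESET": [6, 3, 1, 0, 0],
}


def total_false_positives(levels):
    """Sum the counts across all five prevalence levels."""
    return sum(levels)


def high_prevalence_false_positives(levels, min_level=3):
    """Count only false positives at or above `min_level` (hypothetical cutoff).

    levels[0] holds Level 1, so Level `min_level` starts at index min_level - 1.
    """
    return sum(levels[min_level - 1:])


totals = {vendor: total_false_positives(levels) for vendor, levels in fp_by_level.items()}

# Rank vendors from fewest to most false positives (ties keep table order).
ranking = sorted(totals.items(), key=lambda item: item[1])

for vendor, total in ranking:
    widely_used = high_prevalence_false_positives(fp_by_level[vendor])
    print(f"{vendor}: {total} total, {widely_used} at Level 3 or higher")
```

Looking at the higher levels separately can matter because a false alarm on a file used by millions of people affects far more users than one on a rarely seen file.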

Malware Protection Test

The Malware Protection Test evaluates an antivirus product’s ability to detect and block malware executed from various sources like network drives, USB devices, or files already present on the system. Importantly, AV-Comparatives emphasizes that this test should be considered alongside other factors like price, usability, and vendor support when making a purchase decision.

Here’s a breakdown of the results:

- Detection Rate: This indicates how effectively an antivirus product identifies and blocks malware. A higher percentage signifies better protection.

- Offline Detection Rate: This shows how well the antivirus software detects malware when the computer is not connected to the internet.

- Online Detection Rate: This represents the antivirus product’s ability to detect malware while the computer is connected to the internet. This is generally considered more important as most malware threats are downloaded from the internet.

- Protection Rate: This indicates how effectively the antivirus software prevents malware from infecting the system.

- False Alarms: This represents the number of times the antivirus software mistakenly flags a safe file as malicious. Lower false alarm rates are preferable.

Here are some observations from the test results:

- Top Performers: Avast, Bitdefender, and Kaspersky seem to be at the top based on their detection rates across all categories.

- Offline vs Online: Generally, online detection rates are higher than offline rates, which reflects the antivirus software’s ability to download and utilize the latest threat definitions when connected to the internet.

- Offline detection in antivirus software is important for several reasons:

  - Protection against Zero-Day Attacks: New malware strains and zero-day attacks often emerge before antivirus vendors can update their cloud-based detection databases. Offline detection capabilities help mitigate the risk by relying on locally stored signatures and heuristic analysis to identify suspicious behavior even without the latest updates.

  - Protection in Isolated Environments: Computers that are not regularly connected to the internet, such as those in air-gapped networks or critical infrastructure, might not have access to the latest cloud-based threat intelligence. Offline detection ensures these systems still have a baseline level of protection.

  - Faster Response During Outbreaks: During large-scale malware outbreaks, internet connectivity and vendor servers might become overloaded. Robust offline detection can help identify and block initial infections, slowing down the spread while cloud-based protection catches up.

  - Protection Against Targeted Attacks: Sophisticated attackers might attempt to disable a victim’s internet connection to hinder communication with cloud-based antivirus services. Offline detection offers a layer of defense that’s more difficult to circumvent.

  - Supplementing Online Protection: Offline detection acts as a safety net, catching potential threats that manage to bypass other layers of security. It provides an additional layer of protection even when the computer is connected to the internet.

  - In Summary: While online detection with cloud-based protection is essential in today’s threat landscape, offline detection remains a crucial component of a comprehensive antivirus solution. It enhances protection in situations where up-to-the-minute updates aren’t readily available and offers an added layer of security even in regular online use.

- Avast/AVG, Bitdefender, ESET, G DATA, and Kaspersky received the highest award, Advanced+.

- Avira, McAfee, Microsoft Defender, TotalAV, and Total Defense received the second-highest award, Advanced. These products got a lower rating due to false positives.

Overall:

These results provide a general overview of how different antivirus products performed in detecting malware in March 2024. The test is a useful reference when evaluating your antivirus solution.

Details on AV-Comparatives: https://www.av-comparatives.org/tests/malware-protection-test-march-2024/

Real-World Protection Test

AV-Comparatives’ Real-World Protection Test is a rigorous assessment of antivirus software’s ability to defend users against real-world threats encountered while browsing the web. The test focuses on current, active malicious URLs, including exploits and direct malware links, mirroring typical user experience. Since the test environment uses an updated Windows 10 system with patched third-party software, it highlights the importance of keeping systems up-to-date to reduce vulnerability to exploits. The test involves filtering and validating a large pool of malicious URLs, resulting in a final set of truly dangerous live test cases. The evaluation includes a wide range of security products, testing their full range of protection features in a realistic web browsing scenario.

Test Results – Green being the best results and red being the worst

| Compromised % | User Dependent % | Blocked % | False Positives | Vendor |

|---|---|---|---|---|

| 0.00% | 0.00% | 100.00% | 5 | Avast/AVG |

| 0.40% | 0.00% | 99.60% | 0 | Avira |

| 0.40% | 0.00% | 99.60% | 1 | Bitdefender |

| 1.20% | 0.00% | 98.80% | 0 | ESET |

| 0.00% | 0.00% | 100.00% | 7 | F-Secure |

| 1.20% | 0.00% | 98.80% | 2 | G DATA |

| 0.00% | 0.00% | 100.00% | 0 | Kaspersky |

| 0.40% | 0.00% | 99.60% | 3 | McAfee |

| 1.20% | 0.00% | 98.80% | 3 | Microsoft Defender |

| 0.40% | 0.40% | 99.20% | 9 | Norton |

| 1.20% | 0.00% | 98.80% | 7 | Panda |

| 6.50% | 0.00% | 93.50% | 5 | Quick Heal |

| 1.20% | 0.00% | 98.80% | 0 | Total Defense |

| 2.00% | 0.00% | 98.00% | 0 | TotalAV |

| 0.40% | 0.00% | 99.60% | 20 | Trend Micro |

Observations:

- Trend Micro had the worst false positive count with twenty total.

- Avast/AVG, F-Secure, and Kaspersky had the lowest compromised percentages (0%).

- Norton was the only vendor with a user dependent percentage greater than 0% (0.4%).

- Avast/AVG, F-Secure, and Kaspersky all had the highest blocked percentages (100%).

- Avira, Bitdefender, McAfee, and Trend Micro had the second highest blocked percentages (99.60%).
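
The three percentage columns can be cross-checked against each other. The sketch below assumes that every test case ends in exactly one of the three outcomes (compromised, user dependent, or blocked), so the three figures for a vendor should add up to 100%. The function name and the tolerance value are illustrative choices, and only a few rows from the table are included.

```python
def row_is_consistent(compromised, user_dependent, blocked, tolerance=0.05):
    """Return True if the three outcome percentages sum to roughly 100.

    The tolerance absorbs rounding in the published two-decimal figures.
    """
    return abs(compromised + user_dependent + blocked - 100.0) <= tolerance


# (compromised %, user dependent %, blocked %), copied from the table above.
rows = {
    "Kaspersky": (0.00, 0.00, 100.00),
    "Norton": (0.40, 0.40, 99.20),
    "Quick Heal": (6.50, 0.00, 93.50),
}

for vendor, (compromised, user_dependent, blocked) in rows.items():
    status = "ok" if row_is_consistent(compromised, user_dependent, blocked) else "check this row"
    print(f"{vendor}: {status}")
```

A row whose figures do not add up to 100% most likely contains a transcription error and is worth checking against the linked factsheet.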

Details on AV-Comparatives: https://www.av-comparatives.org/tests/real-world-protection-test-feb-mar-2024-factsheet/

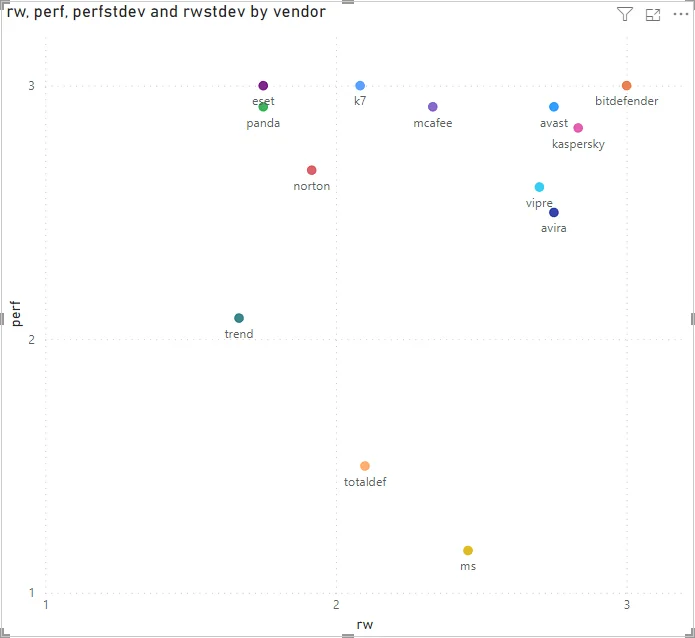

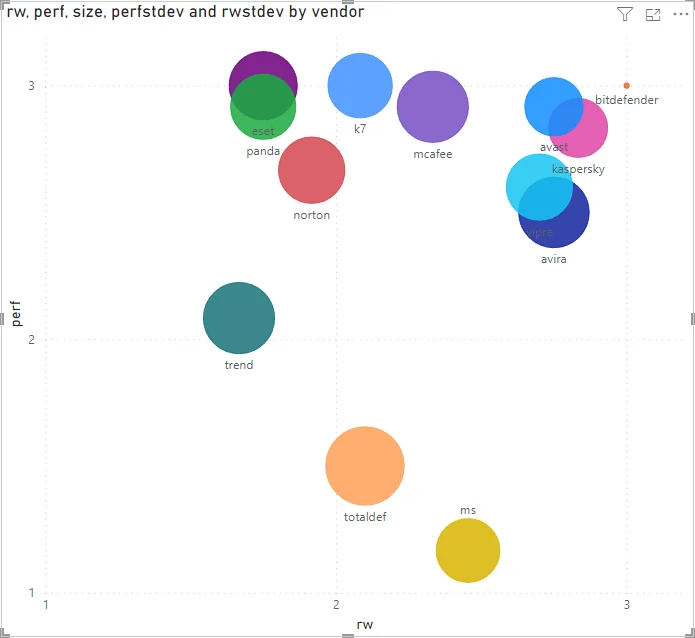

Analyzing AV-Comparatives results over the last 5 years

I compared the most popular AVs using results from AV-Comparatives’ Real-World Protection and Performance test series for the period 2018-2023. Awards were converted to points (Advanced+ = 3, Advanced = 2, Standard = 1, Tested = 0), and the average and standard deviation were calculated for each vendor. The Real-World Protection Test takes into account both detection rates and FP rates.

Microsoft Defender did not participate in the Real-World Protection Feb-May 2021 test; Vipre did not participate in 2023 tests. Total Defense did not participate in 2018 tests. These were not included in the average scores.
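
The scoring described above can be sketched as follows. The point mapping comes from the text; the sample standard deviation is an assumption, since the text does not say which formula was used, and the award sequence is hypothetical rather than any vendor's actual record. Tests a vendor did not take part in are represented as `None` and skipped.

```python
import statistics

# Point values as given in the text.
AWARD_POINTS = {"Advanced+": 3, "Advanced": 2, "Standard": 1, "Tested": 0}


def score_summary(awards):
    """Return (average, standard deviation) of award points, rounded to 2 places.

    `awards` is a list of award names; None marks a test the vendor
    did not participate in and is excluded from both statistics.
    """
    points = [AWARD_POINTS[award] for award in awards if award is not None]
    average = statistics.mean(points)
    # Assumption: sample standard deviation (n - 1 in the denominator).
    spread = statistics.stdev(points) if len(points) > 1 else 0.0
    return round(average, 2), round(spread, 2)


# Hypothetical record: ten Advanced+ awards and two Advanced awards.
hypothetical_awards = ["Advanced+"] * 10 + ["Advanced"] * 2
print(score_summary(hypothetical_awards))  # (2.83, 0.39)
```

A vendor that earned Advanced+ in every test would score an average of 3.00 with a standard deviation of 0.00, so a lower standard deviation indicates more consistent results across the period.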

Results are below. Feel free to draw your own conclusions. Note that I only analyzed results from AV-Comparatives; another testing firm may come up with completely different results due to different methodologies.

Real-World Protection

| Vendor | Average | St.Dev |

|---|---|---|

| Bitdefender | 3.00 | 0.00 |

| Kaspersky | 2.83 | 0.39 |

| Avast | 2.75 | 0.45 |

| Avira | 2.75 | 0.62 |

| Vipre | 2.70 | 0.48 |

| Windows Defender (MS) | 2.45 | 0.52 |

| McAfee | 2.33 | 0.78 |

| Total Defense | 2.10 | 0.74 |

| K7 Antivirus | 2.08 | 0.67 |

| Norton | 1.92 | 0.51 |

| ESET | 1.75 | 0.75 |

| Panda | 1.75 | 0.62 |

| Trend | 1.67 | 0.49 |

Performance Comparison

| Vendor | Average | St.Dev |

|---|---|---|

| Bitdefender | 3.00 | 0.00 |

| K7 Antivirus | 3.00 | 0.00 |

| ESET | 3.00 | 0.00 |

| Panda | 2.92 | 0.29 |

| Avast | 2.92 | 0.29 |

| McAfee | 2.92 | 0.29 |

| Kaspersky | 2.83 | 0.39 |

| Norton | 2.67 | 0.49 |

| Vipre | 2.60 | 0.52 |

| Avira | 2.50 | 0.52 |

| Trend | 2.08 | 0.67 |

| Total Defense | 1.50 | 0.71 |

| Windows Defender (MS) | 1.17 | 0.39 |

Scatterplot

It’d be interesting to have a price comparison of these products (MSRP and market price).